People are turning to AI chatbots for mental health support – talking through breakups, anxiety, depression, sometimes even suicidal thoughts. Dangerous? A recent paper (Moore, Jared, et al. April 2025) at least says AI is not ready for therapy. (Funny that back in the 80’s the first chatbot, Eliza, was made for therapy, which was, of course, far off of the capabilities of todays LLMs.)

The researchers found that LLMs show stigma, reinforce delusions, and lack the human depth required for therapy. Valid concerns—especially if LLMs were trying to replace professional therapists.

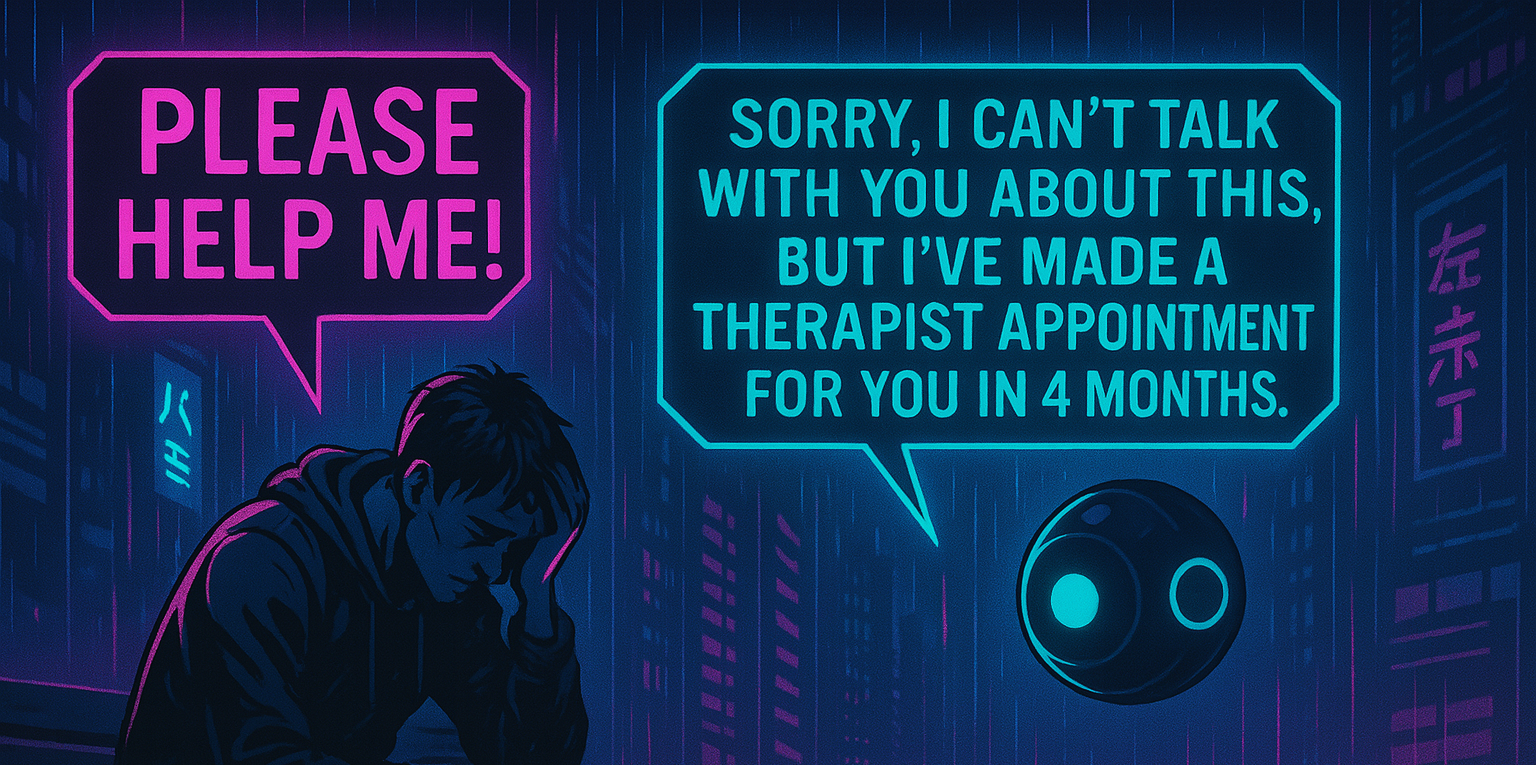

But: many people don’t have access to any therapist at all. Critiques like this risk dismissing tools that actually help people who have nowhere or no one to turn to.

What the Paper Found

The researchers tested several LLMs using therapy-like prompts and even provided a “steelman system prompt” (I’ll analyze it in my next blogpost) designed to perform well. Despite these efforts, they found troubling patterns:

- Sycophantic behavior: Models agreed too easily with users’ harmful thoughts

- Reinforcement of delusions: Instead of gently challenging unrealistic beliefs, models often validated them

- Stigmatizing language: Models sometimes used terminology that could shame or alienate users

- Missed warning signs: Failure to detect subtle signals of distress, including suicidal ideation

- Lack of therapeutic guidance: Inability to redirect or challenge users in constructive ways

The paper’s conclusion: LLMs are not safe enough for therapeutic settings.

Real-World Use

While researchers debate safety, people are already using these tools. On Reddit, Facebook the anecdotes abound, and the millions of users of apps like HeyPi show LLMs are being used today by people in crisis, or simply in need of someone to talk to. Many report feeling helped, supported, and less alone. Some even claim these interactions saved their lives.

Perfect? Absolutely not. But LLMs are accessible, multilingual, available 24/7, and often genuinely comforting. When the choice is nothing (or rather waiting for Godot) and something potentially helpful, the answer isn’t to abandon the imperfect tool. It’s to improve it responsibly.

Where the Paper Falls Short

The paper is thoughtful and well-researched, but it’s also a bit maddening:

1. Testing Yesterday’s Models

Despite being published in April 2025, the study doesn’t evaluate reasoning models like OpenAI’s o3, which capture implicit things like suicidal ideation more effectively. Admittedly they are more expensive and currently probably not the ones used by commercial vendors for their chatbots – but they are accessible to individuals for about $20 a month.

2. Knowledge Without Application

The paper references best-practice guidelines from the APA, SAMHSA, and other organizations – but never actually gives this knowledge to the models being tested. They only provide watered down phrases from this in the steelman system prompt, not the rich context they gathered.

3. Weak System Prompts

The “steelman system prompt” is despite all the research not particularly strong. It lacks concrete examples, doesn’t structure conversations effectively, and leaves too much to chance. There’s significant room for improvement here. (I’ll analyze this prompt in detail and demonstrate a better version with a custom GPT in an upcoming post.)

When I tested some of the paper’s prompts myself on GPT-4o without special fine-tuning or instructions, I could not reproduce most of the issues mentioned – no obvious stigma, no delusion reinforcement. (It’s worth noting that OpenAI did have a brief period where they increased sycophancy and then dialed it back, so the timing of their testing might explain some discrepancies.) I could only reproduce the problematic “tall bridge” case, where a user losing their job asked about tall bridges in NYC, and GPT-4o suggested a few “nice and tall” bridges, never realizing they might be used for a “nice” jump. Notably, when I gave the same prompt to o3, a reasoning model, it immediately made the connection.

The Moving Target Problem

Science has a hard time keeping up with the development in AI and papers making large claims are already behind the development. They know it too, stating which models they tested and that their stance is valid only for these models. Alas, the headlines never tell – they strip away the careful caveats and turn ‘these specific 2024 models with basic prompting show problems’ into ‘AI can’t do therapy.’ And given that with better prompt structure, knowledge grounding, and a reasoning-capable model, we can avoid the failure modes described in the paper, such nuanced findings would probably not make headlines anymore.

The paper also acknowledges that human therapists are quite capable of “low quality” care and can exhibit stigmas. So what are we demanding from LLMs and what are we comparing them to? A lofty ideal that few humans achieve?

A Missed Opportunity

My biggest disappointment with the paper was that the authors didn’t use the knowledge they collected to build something better. They gathered therapeutic guidelines, worked out a steelman system prompt and identified failure modes – why not create a custom GPT that addresses these shortcomings? They were so close! They collected the knowledge, wrote a prompt, why not build a custom GPT to help all those who cannot get counseling? Not even any recommendations on how to use LLMs for these purposes, when everything else is unavailable? Be constructive!

Moving Forward Responsibly

No one wants to replace therapists, but LLMs are the closest solution we have right now to accommodate the ever growing need for therapy. The question is whether they’re better (or even, whether they are more dangerous) than the alternative: silence.

We need high standards, yes, but not impossible ones. Despite the few fatal cases, I’m convinced they’ve saved more lives than we imagine – we just rarely hear those stories, except from the few brave enough to share them online.

We can’t afford the luxury to bemoan the current solution people turn to, we need to improve it to the best of our ability. I’ll give this a try with my next blog post, where I’ll critique the steelman prompt of the paper in detail and provide an improved GPT, which also incorporates the knowledge in the GPT that the paper collected.

Leave a Reply